Introduction

Most of us will never become professional musicians, even though many of us have the musicality and the motor ability to get there. This is primarily due to coincidence, culture and personal preference, but it also depends on our motor abilities. Most musical instruments demand precise and fast motor control, which not everyone has. A musician builds this control through thousands of hours of practice. However, some people I have talked with were hindered from even starting to play the instruments they wanted because of their motor skills. This made me reflect on two questions: would it be possible for me to create musical instruments that are engaging and challenging to play, but at the same time playable with only gross motor skills? And how can accessible musicking technologies controlled with motion in the air be designed and implemented?

Accessibility

Accessibility in digital musical instrument design is not new. There are already instruments and interactive music systems used in music therapy and aimed at being accessible for people with disabilities. Emma Frid (2018) reviewed the trends and solutions in accessible digital musical instrument design at the NIME, SMC and ICMC conferences over the past 30 years. The most common approach was to create tangible controllers, such as an electronic hand drum designed for music therapy sessions; these were used in 13 of 30 cases. Non-tangible solutions, like air instruments, were the second most common approach, with five of 30 papers (Frid, 2018).

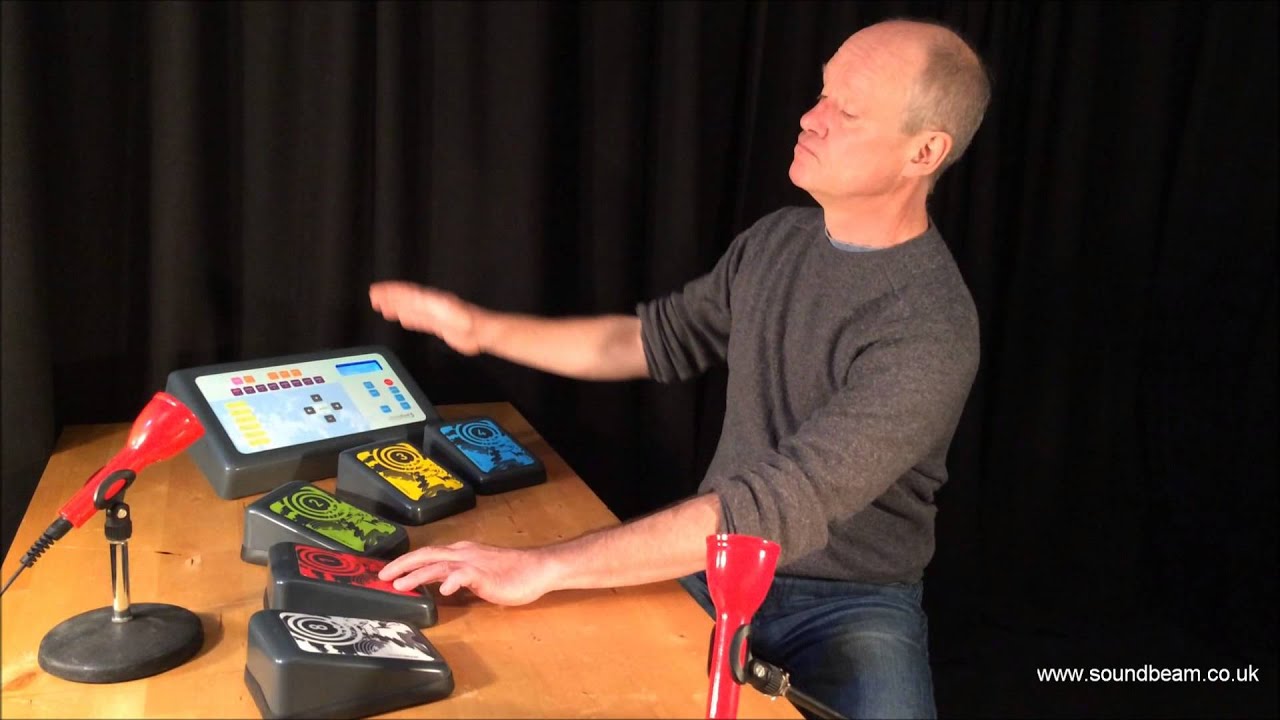

Two examples of musical instruments designed to be inclusive of people with physical disabilities are Soundbeam and Motion Composer. Both instruments are based on motion in the air. Motion Composer uses complex video tracking technology, while Soundbeam uses ultrasonic sensors to track motion. Both systems are used in music therapy, and Soundbeam claims on its webpage that its instruments have been thoroughly tested and evaluated, especially in use cases for children with learning difficulties.

However, both Soundbeam and Motion Composer are commercial products, and at the time of writing this blog post, a Soundbeam 6 costs about 100,000 NOK (about 10,000 EUR). In other words, a person with a regular income can hardly afford one. A Motion Composer does not seem possible to get your hands on (yet?), at least as far as I can tell from their German webpage.

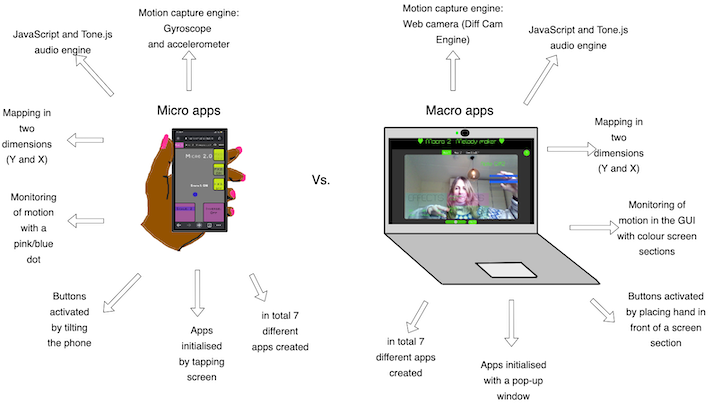

All of this made it clear that the world needs a web-based musical instrument that can be controlled with motion in the air. There are several ways to do this, and I chose to try out two different approaches: using a web camera, and using a mobile phone with accelerometer and gyroscope sensors. Each approach has two apps, so I have developed four apps in total. To reach this stage, the prototypes went through several development steps, with user feedback collected at each stage.

The evolution of the Macro apps

The Diff Cam Engine

The Diff Cam Engine is an open-source core engine that enables motion detection in JavaScript, created by Will Boyd. As I had learned audio programming in JavaScript through one of the MCT courses, I decided to start on the path of creating motion-detecting web apps for musicking. Very quickly, I found out how to use this technology to create sound.
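For readers curious about what camera-based motion detection can look like in code, here is a minimal sketch of frame differencing, the general idea of comparing two consecutive video frames pixel by pixel. This is not the Diff Cam Engine's actual code or API. The function name `motionScore` and the `pixelThreshold` default are my own assumptions for illustration.

```javascript
// Hypothetical helper for illustration; NOT part of the Diff Cam Engine API.
// prev and curr are RGBA pixel buffers of equal length, laid out like
// ImageData.data (4 bytes per pixel: red, green, blue, alpha).
function motionScore(prev, curr, pixelThreshold = 32) {
  if (prev.length !== curr.length) {
    throw new Error("Frames must have the same size");
  }
  const pixelCount = curr.length / 4;
  let changed = 0;
  for (let i = 0; i < curr.length; i += 4) {
    // Average absolute difference over the R, G and B channels; alpha is ignored.
    const diff =
      (Math.abs(curr[i] - prev[i]) +
        Math.abs(curr[i + 1] - prev[i + 1]) +
        Math.abs(curr[i + 2] - prev[i + 2])) / 3;
    // pixelThreshold is an assumed value that filters out camera noise.
    if (diff >= pixelThreshold) changed++;
  }
  // Fraction of pixels that changed between the two frames, from 0 to 1.
  return pixelCount === 0 ? 0 : changed / pixelCount;
}
```

In a browser, the two buffers could come from drawing the webcam video onto a canvas and reading it back with `getImageData`. The resulting score could then drive a sound parameter such as volume.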

Macro 1.0

As the first test video showed, the latency between action and sound was quite high. So the next step was to lower the latency, make the system more interactive and improve how it sounded. This led to the first official version, released with a feedback form that asked people to test the apps and give feedback. This version was called Macro 1.0 and was named after the Macro level, one of the three "spatiotemporal levels of human action" (Jensenius, 2017) (this will make more sense in the next section when I describe the Micro apps).

Macro 1.0 consists of App 1, App 2 and App 3. They are all based on web camera motion detection but have different approaches for musical interaction. In the video below, you can see a demonstration of Macro 1.0, or try it out yourself from this link.

Macro 2.0

I took the system further with the second release by introducing a button-free design. The intention was to make the instrument more accessible by avoiding actions that depend on fine motor skills, like clicking a button with a mouse. This version includes only two apps, as app 2 and app 3 from Macro 1.0 were combined into one app. Try out the apps via this link:

Macro 2.1

The last iteration took all the feedback into account and tried to improve the instructions, the interaction and the visuals of the apps. App 1 is a Theremin-like musical instrument where you can control pitch on the Y-axis (vertical) and select between two effects and two instruments on the X-axis (horizontal). This iteration has fewer features than the previous one, as feedback from users showed that people had problems with unintended output. Still, it is a problem that the app interprets all movement as input, and the next step will be to teach the system to distinguish between different kinds of gestures.
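To make the Theremin-like mapping more concrete, here is a small sketch of how a position in the camera image could be turned into a pitch and a selection. This is not the actual Macro 2.1 code. The function names, the frequency range (220 to 880 Hz) and the split of the X-axis into four equal zones are all assumptions made for illustration.

```javascript
// Illustrative sketch only; names and ranges are assumptions, not Macro 2.1 code.

// Map a normalized vertical position (0 = top of the image, 1 = bottom)
// to a frequency in Hz. The mapping is exponential, so equal distances
// on screen correspond to equal musical intervals.
function yToFrequency(y, minHz = 220, maxHz = 880) {
  const clamped = Math.min(1, Math.max(0, y));
  const t = 1 - clamped; // invert so that moving up raises the pitch
  return minHz * Math.pow(maxHz / minHz, t);
}

// Split the normalized horizontal position (0 = left, 1 = right)
// into zoneCount equal zones and return the zone index.
function xToZone(x, zoneCount = 4) {
  const clamped = Math.min(1, Math.max(0, x));
  return Math.min(zoneCount - 1, Math.floor(clamped * zoneCount));
}
```

With four zones, the indices could for example stand for two effects and two instruments. In a browser, the frequency could be sent to a Web Audio `OscillatorNode` through its `frequency` parameter.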

App 2 is a random music generator, where the user can interact by turning instruments on and off and by applying effects (on the Y-axis). The web-app Synastheasia inspired me to create a system for random music generation.

You are welcome to try out the apps from this link. Check out the video below to see a demonstration of Macro 2.1 app 1 and app 2:

The evolution of the Micro apps

At about the same time as I started to create the Macro apps, I was developing the Micro web apps for the MICRO project at RITMO Centre for Interdisciplinary Studies in Rhythm, Time and Motion at the University of Oslo. The apps were developed as air instruments and explored micromotion using the accelerometer and gyroscope sensors on smartphones with JavaScript and the Web Audio API. I decided to develop the apps further as a part of this Master's thesis.

Micro 1.0

The first release of the Micro apps included three apps with different approaches to exploring sound and music. The apps have their own wiki in which all three apps are explained. You can also watch this demonstration video which describes the apps:

Micro 2.0 and Micro 2.1

With the second iteration of the Micro apps, the goal was to remove the buttons and find a way to make the instrument entirely touch-free. This was achieved by letting the user "hover" over the buttons with a blue dot that followed the phone's motion. In this way, the user could control melodies and musical parameters by tilting the phone, and activate buttons by tilting the phone to different angles. However, it was impossible to avoid buttons entirely, as the accelerometer and gyroscope sensors had to be activated by a touch action. The solution was to let the button fill the whole screen.
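The "hover" idea can be sketched in a few lines. This is not the actual Micro code. The names `tiltToDot` and `createHoverButton`, the 30 degree tilt range and the dwell time (how long the dot must rest on a button before it activates) are assumptions for illustration, and dwell-based activation is just one possible way to trigger a button without touch.

```javascript
// Illustrative sketch only; names, ranges and the dwell mechanism are assumptions.

// Map tilt angles in degrees to a dot position normalized to [0, 1].
// beta is the front-to-back tilt and gamma the left-to-right tilt,
// as reported by the browser's deviceorientation event.
function tiltToDot(beta, gamma, maxTilt = 30) {
  const norm = (angle) =>
    Math.min(1, Math.max(0, (angle + maxTilt) / (2 * maxTilt)));
  return { x: norm(gamma), y: norm(beta) };
}

// Create a button that activates once the dot has stayed inside
// its rectangle for dwellMs milliseconds. The returned update function
// returns true exactly once per visit, at the moment of activation.
function createHoverButton(rect, dwellMs = 800) {
  let enteredAt = null;
  let fired = false;
  return function update(dot, nowMs) {
    const inside =
      dot.x >= rect.x && dot.x <= rect.x + rect.w &&
      dot.y >= rect.y && dot.y <= rect.y + rect.h;
    if (!inside) {
      // Leaving the button resets it so it can be activated again later.
      enteredAt = null;
      fired = false;
      return false;
    }
    if (enteredAt === null) enteredAt = nowMs;
    if (!fired && nowMs - enteredAt >= dwellMs) {
      fired = true;
      return true;
    }
    return false;
  };
}
```

In a browser, `beta` and `gamma` would come from `deviceorientation` events. On iOS 13 and later, Safari only delivers these events after `DeviceOrientationEvent.requestPermission()` has been called from a user gesture such as a tap, which is one reason a first touch is hard to avoid.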

In app 2 of the 2.0 release, the same random groove generator as in Macro 2.0 app 2 was introduced. In the Micro 2.1 release, most functionalities were the same as in the 2.0 version, but the visuals and some of the sound design were improved.

The video below shows a demonstration of the Micro 2.1 app 1:

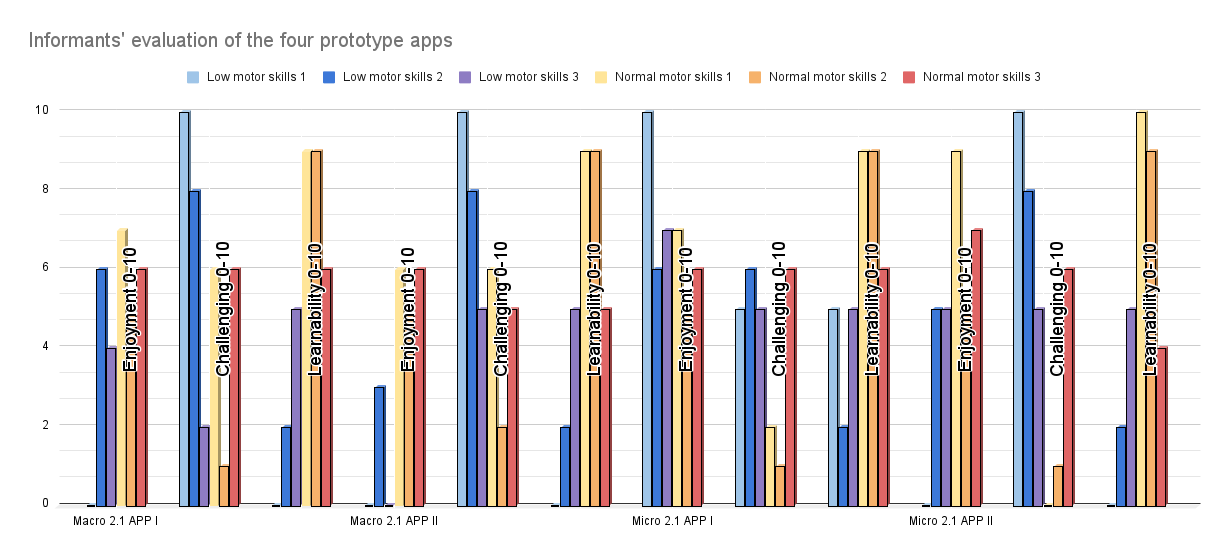

User studies, reflections and further research

The goal of this Master's thesis has been to create accessible web-based musicking technologies, which meant that it was essential to test how accessible the prototypes were. To narrow the scope, I had decided from the beginning to focus on people with low fine motor skills. I was lucky and got in contact with three informants who had low fine motor skills, and as a control group, I invited three informants with normal motor skills. The main findings from the studies showed that people with low fine motor skills and normal motor skills could interact with the prototypes on almost the same level. Both groups could explore sound and music with the apps; however, both groups encountered problems with the Macro apps creating unintended sound by interpreting all motion as input. The informants with low fine motor skills generally self-reported less enjoyment with the apps than the group with normal motor skills.

The Micro 2.1 app 1 seemed to be the most enjoyed app among the users. Interestingly, the studies indicated that the apps were probably less accessible to these users without touch and mouse functionality than they would have been with it. Even though the users reported low fine motor skills, they were used to touch and mouse technology and found this easier than making gestures in the air. The exception was one of the three users, who said that tilting the phone to activate buttons was easier for him than clicking a button on the mobile screen.

However, a sample of only three users with low fine motor skills is not enough to generalize or draw any clear conclusions. More development and research should be done to investigate this technology further. The apparent advantage of developing web-based software is that it is readily available and accessible to many people. These days with pandemic lockdowns, we are again prevented from seeing each other. The development of online motion-tracking musicking technologies might be valuable for future research and artistic experience.

The most important finding from the user studies was that musicking technologies should adapt to the individual in order to be accessible for people with low fine motor skills. For many people, touch technology might increase accessibility and can be used in addition to motion-tracking technology. Based on other accessible instruments such as Motion Composer and Soundbeam, it is reasonable to assume that the air instrument approach still has excellent potential for accessibility. The technology developed in this thesis leaves considerable room for improvement. More precise and complex mappings can be implemented as mobile and computer cameras improve, e.g. with the option of 3D interpretation of the video stream. Implementing image recognition and machine learning in the web-camera-based apps could help distinguish between different movements.

Links:

- Web page of the project

- The Master's thesis pdf

- Macro 2.1 GitHub repository

- Macro 2.0 GitHub repository

- Macro 1.0 GitHub repository

- Micro 2.1 GitHub repository

- Micro 2.0 GitHub repository

- Micro 1.0 GitHub repository

- Macro 2.1 web-app

- Macro 2.0 web-app

- Macro 1.0 web-app

- Micro 2.1 web-app (must be opened on a smartphone)

- Micro 2.0 web-app (must be opened on a smartphone)

- Micro 1.0 web-app (must be opened on a smartphone)

References

- Frid, E. (2018). Accessible digital musical instruments-a survey of inclusive instruments. In Proceedings of the International Computer Music Conference (pp. 53-59). International Computer Music Association.

- Jensenius, A. R. (2017). Sonic Microinteraction in "the air". In M. Lesaffre, P.-J. Maes & M. Leman (Eds.), The Routledge companion to embodied music interaction (pp. 429-437). Routledge.