UPCOMING: MIRAGE Closing Seminar: Digitisation and computational analysis of folk music – Apr. 26, National Library of Norway, Oslo

About the MIRAGE project

Read the article: "Artificial intelligence can help you understand music better"

NEW: Short description of the MIRAGE project: "Advancing AI for music analysis and transcription"

The objective is to generate rich and detailed descriptions of music, encompassing a large range of dimensions, including low-level features, mid-level structures and high-level concepts. Significant effort will be dedicated to the design of applications of value for musicology, music cognition and the general public.

We further extend the design of our leading computational framework, which aims to extract a large set of information from music, such as timbre, notes, rhythm, tonality or structure. Yet music can easily become complex. To make sense of such a subtle language, refined musicological considerations need to be formalised and integrated into the framework. Music is largely about repetition: motives are repeated many times within a piece, and pieces of music imitate each other and cluster into styles. Revealing this repetition is both challenging and crucial. A large range of musical styles will be considered: traditional, classical and popular; acoustic and electronic; and from various cultures. The rich description of music provided by this new computer tool will also be used to investigate elaborate notions such as emotions, groove or mental images.

The approach follows a transdisciplinary perspective, articulating traditional musicology, cognitive science, signal processing and artificial intelligence.

This project is also oriented towards the development of groundbreaking technologies for the general public. Music videos have the potential to significantly increase music appreciation, and the effect is stronger when music and video are closely articulated. Our technologies will make it possible to generate videos on the fly for any music. One challenge in music listening is that it all depends on the listeners’ implicit ear training. Automated, immersive, interactive visualisations will help listeners (including hearing-impaired listeners) better understand and appreciate the music they like (or don’t like yet). This will make music more accessible and engaging. It will also be possible to browse large music catalogues visually. Applications to music therapy will also be considered.

This project is carried out in collaboration with the National Library of Norway, a world leader in digitising cultural heritage. Check out the National Library of Norway’s Digital Humanities Laboratory.

Objectives

The fundamental research question of MIRAGE is:

- How to design a computational system that would generate detailed and rich descriptions of music along a large range of dimensions?

A number of sub-questions arise from this:

- What kinds of music analysis can be undertaken using this new computational technology that would push musicology forward in new directions?

- How can music perception be modelled in the form of a complex system composed of a large set of interdependent modules related to different music dimensions?

- What can be discovered in terms of new predictive models describing listeners’ percepts and impressions, based on this new type of music analysis?

General methodology

The envisioned methodology consists of close interaction between automated music transcription and detailed musicological analysis of the transcriptions.

Methods based on mathematical analysis of audio recordings are not able to catch all the subtle transformations at work in the music. Most listeners, even if they are neither musicians nor musicologists, are able to follow and understand the logic of the music because they more or less consciously build a somewhat refined analysis of it. For these reasons, we advocate a different approach that attempts to construct a highly detailed analysis of the music in order to grasp some of its subtler aspects and to reach a higher degree of understanding. Besides, there is a high degree of interdependency between the different structural dimensions of music. No particular dimension can be fully understood without taking the other dimensions into consideration as well. For instance, the “simple” task of tracking the beat actually requires the ability to detect repeating motifs, because beat perception can emerge from successive repetitions. But at the same time, motivic analysis requires a rich description of the music, which includes rhythm. We therefore see a circularity in the dependencies, which can be addressed only by considering all these aspects together while progressively analysing the piece of music from beginning to end.

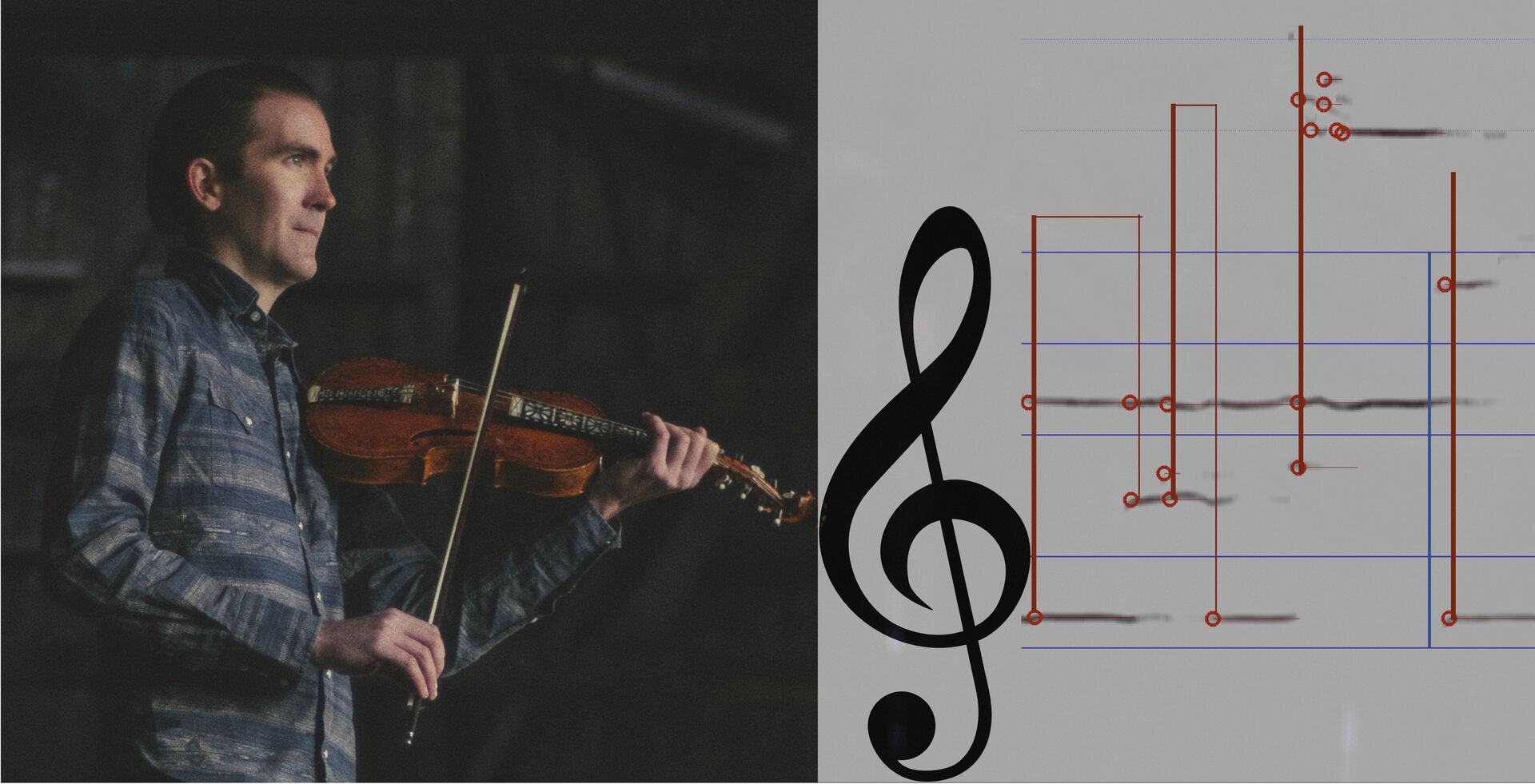

Hence, whereas a traditional audio-based approach would typically apply signal processing, machine learning or statistical operators directly to the audio recording, the proposed approach relies instead on a transcription of the audio signal into a music score, followed by a detailed AI-based analysis of the score grounded in musicological and cognitive considerations.
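To illustrate how a periodicity can emerge from motivic repetition in a transcribed score, here is a minimal Python sketch. It is not the MIRAGE implementation: the function name `motif_repeat_period`, the `motif_len` parameter and the toy melody are assumptions made for this example only. It describes a note list by its successive pitch intervals (so that transposed repetitions still match) and returns the most frequent time distance between repetitions of the same short motif.

```python
from collections import Counter


def motif_repeat_period(onsets, pitches, motif_len=3):
    """Most frequent time distance between repeats of an interval motif.

    Hypothetical helper for illustration: `onsets` are note start times in
    seconds, `pitches` are MIDI note numbers, and a motif is a run of
    `motif_len` successive pitch intervals.
    """
    # Pitch intervals make the description invariant to transposition.
    intervals = [b - a for a, b in zip(pitches, pitches[1:])]

    # Record the onset time at which each motif occurrence starts.
    occurrences = {}
    for i in range(len(intervals) - motif_len + 1):
        motif = tuple(intervals[i:i + motif_len])
        occurrences.setdefault(motif, []).append(onsets[i])

    # Count the distances between successive occurrences of the same motif.
    distances = Counter()
    for times in occurrences.values():
        for t0, t1 in zip(times, times[1:]):
            distances[round(t1 - t0, 3)] += 1

    if not distances:
        return None  # no motif is repeated
    return distances.most_common(1)[0][0]


# Toy melody (assumed data): a four-note motif stated three times,
# each time transposed, with one note every 0.5 s.
pitches = [60, 62, 64, 62, 65, 67, 69, 67, 67, 69, 71, 69]
onsets = [i * 0.5 for i in range(len(pitches))]
period = motif_repeat_period(onsets, pitches)
```

In this toy case the motif recurs every four notes, so `period` corresponds to a two-second cycle. The sketch covers only one direction of the circularity described above, from repetition to periodicity; it takes no rhythmic information into account when finding the motifs.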

Structure

The project is organised into five work packages:

- WP1: New Methods for Automated Transcription: Detection of notes from audio, construction of the score based on higher-level musicological analysis (provided by WP2). Tests on a large range of music from diverse cultures and genres. Through a close collaboration with musicologists, traditional music from Norway and many other cultures around the world will be considered. Popular and art music from the 20th and 21st centuries will also be studied, especially music that uses specific instruments or specific performing techniques, as well as electro-acoustic music, which further challenges the task of transcription by questioning the basic definitions of “notes” or musical events and their parameters.

- WP2: Comprehensive Model for Music Analysis: Modelling of the musicological analysis of the music (transcriptions of audio recordings produced in WP1, MIDI files, etc.) along a large range of musical dimensions. Each musical dimension is modelled by a specific module. The complex network of interdependencies between modules is also investigated. Musicological validation following the same principles and plan as in WP1.

- WP3: New Perspectives for Musicology. This WP aims at transcending musicology’s capabilities through the development of new computational technologies specifically tailored to its needs. Three topics:

  - Maximising the informativeness of music visualisation

  - Retrieval technology tailored to musicological queries

  - Unveiling music intertextuality

- WP4: Theoretical and Practical Impacts on Music Cognition. We take advantage of the new analytical tool to enrich music cognition models. Theoretically, the computational models conceived in this project can suggest blueprints for cognitive models. Practically, a better description of music enables a richer understanding of its impact on listeners. Building on the momentum gathered by our previous software MIRtoolbox in the domain of music cognition, the new computational framework for music analysis will be fully integrated in our new open source toolbox MiningSuite. One particular application consists in enriching predictive models that formalise the relationships between musical characteristics and their impact on listeners’ appreciation of music. In particular, musical shape and mental images, groove and emotions will be considered.

- WP5: Technological and Societal Repercussions. This WP examines the large range of possible impacts of the research, with a view to initiating further research and innovation projects and networks. Three axes:

  - Valorisation of the online music catalogue: As a continuation of the SoundTracer project, we will prototype apps allowing the general public to browse the Norwegian folk music catalogue, understand the characteristics of the different music recordings, interactively search for particular musical characteristics of their choice, such as melodies or rhythmical patterns, and get personalised recommendations based on the users’ appreciation of the tunes they have already listened to. Unlike Spotify or Apple Music, for instance, where songs are compared based on users’ consumption, here music will be compared based on actual musical content as found by the analyses produced in WP2.

  - Impact on the general public: The objective of this task is to compile a detailed list of possible applications of the developed technologies for the general public. We will imagine, for instance, how new methods of music visualisation will help non-experts better understand how music works and appreciate the richness of music more deeply. We will also investigate how these new technologies might be used, for instance, as a complement to traditional music criticism or to music videos. We will prototype examples of visualisations that will be published in mainstream music or technology magazines. The new capabilities of music retrieval, catalogue browsing and recommendation offered by these new technologies will be studied on various music catalogues. The final objective is to initiate new research and innovation projects around those topics.

  - Music therapy tools: We will also prototype music therapy applications of our technologies. In particular, we will further extend our Music Therapy ToolBox (MTTB), dedicated to the analysis of free improvisations between therapists and clients, with the integration of visualisations related to higher-level music analysis. Here also, further applications will be envisaged for future research and innovation proposals.
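The idea of comparing music by its actual content rather than by users’ consumption, mentioned for the catalogue browsing apps, can be sketched in a few lines of Python. This is only an illustration under strong assumptions, not the analysis produced in WP2: tunes are reduced to lists of MIDI pitches, similarity is measured with the standard library’s `difflib.SequenceMatcher` on pitch intervals, and the function names and the three catalogue entries are hypothetical.

```python
from difflib import SequenceMatcher


def pitch_intervals(pitches):
    """Successive pitch intervals, so that transposed tunes compare as equal."""
    return [b - a for a, b in zip(pitches, pitches[1:])]


def melodic_similarity(tune_a, tune_b):
    """Similarity in [0, 1] between two tunes given as MIDI pitch lists."""
    matcher = SequenceMatcher(
        None, pitch_intervals(tune_a), pitch_intervals(tune_b), autojunk=False
    )
    return matcher.ratio()


def rank_by_content(query, catalogue):
    """Return catalogue titles sorted from most to least similar to the query."""
    scored = [
        (title, melodic_similarity(query, pitches))
        for title, pitches in catalogue.items()
    ]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [title for title, _ in scored]


# Hypothetical catalogue entries, invented for this example.
catalogue = {
    "tune_c": [60, 59, 57, 55, 53],  # descending line, unrelated contour
    "tune_b": [60, 62, 64, 62, 60],  # shares only the opening of the query
    "tune_a": [62, 64, 66, 67, 69],  # the query transposed up a tone
}
query = [60, 62, 64, 65, 67]
ranking = rank_by_content(query, catalogue)
```

Here the transposed tune ranks first because its interval sequence is identical to that of the query. A real system would compare much richer descriptions (rhythm, motivic structure, tonality) than this single melodic dimension.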