Standalones

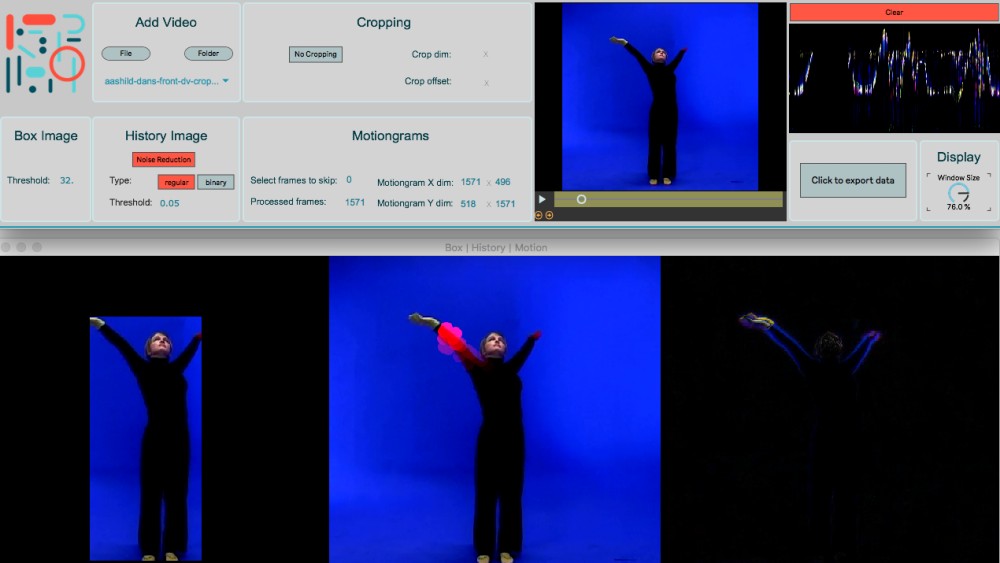

If you want a simple solution for creating video visualizations, check out VideoAnalysis (non-realtime) and AudioVideoAnalysis (realtime). These are standalone apps for macOS and Windows. The toolboxes below all require some programming experience (Max, Matlab, Python, or Bash).

Musical Gestures Toolbox for Python

The Python version of MGT is the newest edition and the one under the most active development. Most of the functionality of the Matlab version has been ported to it. Because it can be run from Jupyter Notebook, it is well suited for teaching.
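
Many of the toolbox's visualizations, such as the motiongrams discussed in the publications listed below, build on frame differencing. The following is a minimal, dependency-free sketch of that idea, not the API of MGT for Python. It assumes the video has already been decoded into grayscale frames stored as 2D lists of intensities, and the function names `motion_image` and `motiongram` and the `threshold` parameter are hypothetical.

```python
from typing import List

# Hypothetical representation: a grayscale frame as rows of pixel intensities in [0, 1].
Frame = List[List[float]]


def motion_image(prev: Frame, curr: Frame, threshold: float = 0.05) -> Frame:
    """Absolute difference between two frames; values below `threshold` are zeroed to suppress noise."""
    return [
        [d if d >= threshold else 0.0 for d in (abs(c - p) for p, c in zip(prow, crow))]
        for prow, crow in zip(prev, curr)
    ]


def motiongram(frames: List[Frame], threshold: float = 0.05) -> Frame:
    """Collapse each motion image into one column (the mean of each pixel row)
    and place the columns side by side over time.

    Returns one row per pixel row and one column per pair of consecutive frames.
    """
    columns = []
    for prev, curr in zip(frames, frames[1:]):
        m = motion_image(prev, curr, threshold)
        columns.append([sum(row) / len(row) for row in m])
    # Transpose: from one list per time step to one list per pixel row.
    return [list(r) for r in zip(*columns)]
```

For a 2×2 video where only the top-left pixel flickers on and off, `motiongram` returns `[[0.5, 0.5], [0.0, 0.0]]`: the top row shows motion at both time steps, the bottom row none. A real implementation would operate on decoded video arrays rather than nested lists.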

Musical Gestures Toolbox for Matlab

The Matlab version of MGT contains a large collection of video visualization functions, along with support for batch processing and server processing. It also integrates with MIRtoolbox and MoCap Toolbox.

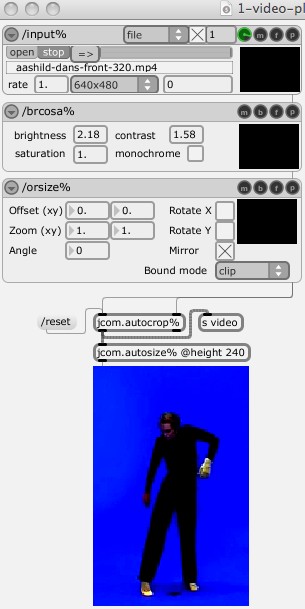

Musical Gestures Toolbox for Max

The original MGT was a collection of real-time modules for the graphical programming environment Max/MSP/Jitter. The toolbox is currently distributed as part of the Jamoma package. Because it runs in real time, it is useful in music and dance performances.

Musical Gestures Toolbox for Terminal

This is an experimental version of MGT aimed at exploring the functionality of FFmpeg and ImageMagick. As the code matures, it is gradually being incorporated into MGT for Python.
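
As an illustration of the kind of FFmpeg functionality involved, the sketch below builds a command that writes a simple "motion video" (the absolute difference between consecutive frames). It is not the actual MGT for Terminal script: the function name `motion_video_cmd` and the file names are hypothetical, and it relies only on FFmpeg's standard `format` filter and the `tblend` filter with `all_mode=difference`.

```python
import shlex
import subprocess
from typing import List


def motion_video_cmd(src: str, dst: str) -> List[str]:
    """Build an FFmpeg command for a frame-difference ("motion") video.

    `format=gray` first reduces each frame to a single luma channel, then
    `tblend=all_mode=difference` outputs the absolute difference between
    each pair of consecutive frames.
    """
    return ["ffmpeg", "-i", src, "-vf", "format=gray,tblend=all_mode=difference", dst]


if __name__ == "__main__":
    cmd = motion_video_cmd("dance.mp4", "dance_motion.mp4")  # hypothetical file names
    print(shlex.join(cmd))  # shell-quoted form, for pasting into a terminal
    # Uncomment to execute (requires FFmpeg on PATH and an existing input file):
    # subprocess.run(cmd, check=True)
```

Building the argument list separately from running it keeps the command easy to inspect and avoids shell-quoting problems with file names containing spaces.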

History

The Musical Gestures Toolbox has been developed at the University of Oslo since 2004. It started out as a patcher made by Alexander Refsum Jensenius for the graphical multimedia programming environment Max/MSP/Jitter. The collection grew and was merged into modules and components in the Jamoma project. The modules have been used in a number of music and dance performances over the years.

When the course MUS2006 Music and Body Movements started at the University of Oslo in 2009, the students, most of whom had no programming experience, needed an easy way to create video visualizations. The result was the standalone app VideoAnalysis, which was thoroughly overhauled in 2020.

For scientific use, functions from the original toolbox were ported to MGT for Matlab in 2015 and to MGT for Python in 2019. Because some code needs to run on servers, there is now also a more limited version, MGT for Terminal. These scripting-based toolboxes differ slightly in implementation, but they share the goal of providing powerful yet fairly simple-to-use operations for video visualization.

The software is maintained by the fourMs lab at RITMO Centre for Interdisciplinary Studies in Rhythm, Time and Motion at the University of Oslo.

Publications

- Laczkó, B., & Jensenius, A. R. (2021). Reflections on the Development of the Musical Gestures Toolbox for Python. In P. R. Kantan, R. Paisa, & S. Willemsen (Eds.), Proceedings of the Nordic Sound and Music Computing Conference. Aalborg University Copenhagen.

- Jensenius, A. R. (2018). Methods for studying music-related body motion. In R. Bader (Ed.), Handbook of Systematic Musicology (pp. 567–580). Springer-Verlag.

- Jensenius, A. R. (2018). The Musical Gestures Toolbox for Matlab. Proceedings of the International Society for Music Information Retrieval Conference, Late-Breaking Demos.

- Jensenius, A. R. (2014). From experimental music technology to clinical tool. In K. Stensæth (Ed.), Music, health, technology, and design. Norwegian Academy of Music.

- Jensenius, A. R. (2013). Some video abstraction techniques for displaying body movement in analysis and performance. Leonardo, 46(1), 53–60.

- Jensenius, A. R. (2013). Non-Realtime Sonification of Motiongrams. Proceedings of the Sound and Music Computing Conference, 500–505.

- Jensenius, A. R., & Godøy, R. I. (2013). Sonifying the shape of human body motion using motiongrams. Empirical Musicology Review, 8(2), 73–83.

- Jensenius, A. R. (2012). Evaluating How Different Video Features Influence the Visual Quality of Resultant Motiongrams. Proceedings of the Sound and Music Computing Conference, 467–472.

- Jensenius, A. R. (2007). Action–Sound: Developing Methods and Tools to Study Music-Related Body Movement [PhD thesis, University of Oslo].

- Jensenius, A. R. (2006). Using motiongrams in the study of musical gestures. Proceedings of the International Conference on New Interfaces for Musical Expression, 499–502.

License

All versions of the Musical Gestures Toolbox are open source and released under the GNU General Public License v3.0.